Efficient Model-Based Multi-Agent Mean-Field Reinforcement Learning

Abstract

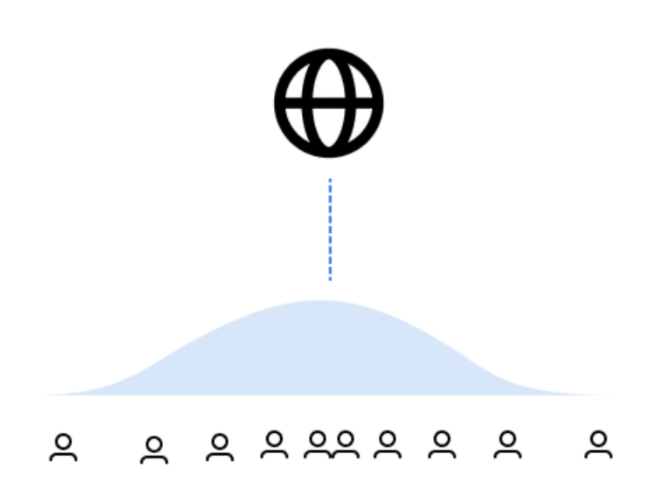

Learning in multi-agent systems is highly challenging due to the inherent complexity introduced by agents’ interactions. We tackle systems with a very large population of interacting agents (e.g., swarms) via Mean-Field Control (MFC). MFC considers an asymptotically infinite population of identical agents that collaboratively aim to maximize the collective reward. Specifically, we consider the case of unknown system dynamics, where the goal is to simultaneously optimize the reward and learn from experience. We propose an efficient model-based reinforcement learning algorithm, M3-UCRL, that runs in episodes and provably solves this problem. M3-UCRL uses upper-confidence bounds to balance exploration and exploitation during policy learning. Our main theoretical contributions are the first general regret bounds for model-based RL for MFC, obtained via a novel mean-field type analysis. M3-UCRL can be instantiated with different models, such as neural networks or Gaussian Processes, and effectively combined with neural network policy learning. We empirically demonstrate the convergence of M3-UCRL on the swarm motion problem of controlling an infinite population of agents that seek to maximize a location-dependent reward while avoiding congested areas.